Microsoft's $200 Billion Nightmare: Azure Outage Takes Down 365, Xbox, Starbucks, Airlines—Just Hours Before Earnings Call

Something every CIO fears happened on October 29, 2025: a configuration change in Microsoft’s cloud stack cascaded into a global Azure outage that knocked out Microsoft 365, Xbox services, and dozens of dependent businesses, from Starbucks to multiple airlines, just hours before Microsoft’s quarterly earnings release. The outage is a sharp reminder that when critical infrastructure fails, the ripple effects reach far beyond error pages: they hit revenue, operations, and investor confidence.

AP News

What happened (quick summary)

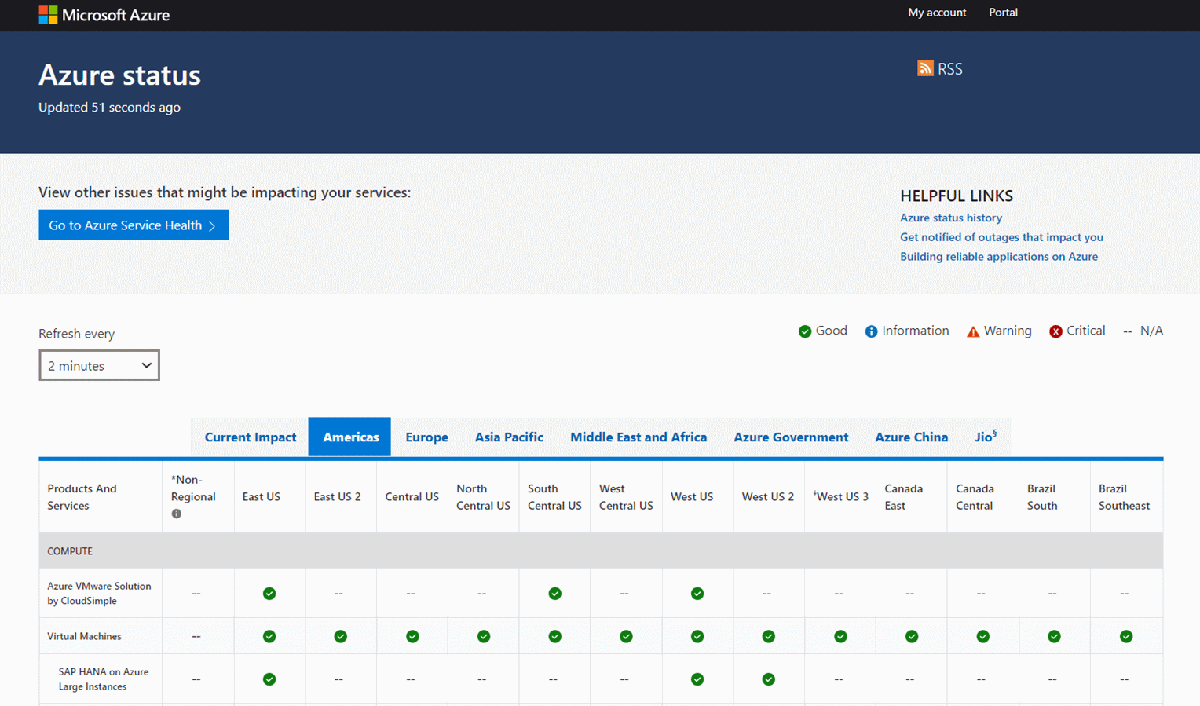

Beginning around 16:00 UTC, customers worldwide reported being unable to access the Azure Portal and dependent services. Microsoft traced the disruption to Azure Front Door (AFD), its global content delivery and routing layer, after what it described as an inadvertent configuration change; that change, in turn, created DNS and routing problems for services that relied on AFD. The outage degraded access to Outlook, Teams, and other Microsoft 365 apps, as well as Xbox Live, Minecraft, Copilot, and third-party apps and websites hosted on Azure.

AP News

Who got hurt

This wasn’t a niche developer hiccup: it hit consumer-facing and mission-critical services. Starbucks reported app and point-of-sale (POS) disruptions in some locations, and multiple airlines (including Alaska and Hawaiian) said check-in and web/app functions were affected, forcing manual workarounds at airports. Retailers and grocery chains with Azure-hosted systems also logged problems, and millions of Microsoft 365 users experienced access and authentication issues. The outage also had knock-on effects on government and essential services in certain markets.

WLKY

Why timing made it worse: the earnings call specter

Outages are expensive; outages in the cloud era are also visible. This incident came just hours before Microsoft’s quarterly earnings release, a moment when investors and analysts scrutinize every operational signal. Temporary downtime can feed negative press, prompt questions about reliability and platform risk, and even nudge short-term market moves. Given Microsoft’s market capitalization, a global service disruption on the same day as an earnings report was immediately framed by many outlets as a “$200 billion nightmare”: shorthand for the market value that could wobble in reaction to perceived platform fragility.

Yahoo News

Cost beyond the headlines

Putting a dollar figure on the damage is messy. Direct revenue loss (e.g., interrupted transactions), operational costs (extra manual labor, ticket refunds, airport delays), and the intangible hit to customer trust all add up. For enterprise customers, an outage can mean lost productive hours, missed SLAs, and the cost of emergency engineering work to reroute systems. For Microsoft, repeated high-visibility incidents are also a reputational risk that competitors and customers will raise in procurement conversations, particularly in sectors where uptime is non-negotiable.

Bloomberg

What Microsoft did and what comes next

Microsoft deployed a fix and reported a staged recovery, restoring Azure Front Door availability to the high-90s percent range while it continued to monitor remaining “tail-end” issues. The company attributed the outage to an internal configuration change and emphasized its mitigation steps. Expect a detailed post-incident report from Microsoft covering root cause, timeline, remediation, and preventive measures: the kind of transparency enterprise customers demand after a large outage.

AP News

Lessons for businesses and cloud planners

Assume failure, design for graceful degradation. Architect apps so essential flows can degrade locally if CDN/routing layers fail.

Multi-region and multi-provider contingencies. Heavy reliance on a single cloud provider or single front-door routing path raises systemic risk.

Runbook readiness. Test manual/automated playbooks for cutover scenarios regularly; when airports and cafes have to fall back to paper, preparedness reduces chaos.
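For teams that want to see what the first two lessons might look like in practice, here is a minimal Python sketch, not a production pattern and not anything Microsoft or the affected companies have described. It assumes a hypothetical order-submission flow with two made-up endpoints (a primary route behind a front-door layer and a secondary route that bypasses it), and the names `ENDPOINTS`, `submit_order`, `post`, and `offline_queue` are invented for illustration. The client tries each route in turn and, if all of them fail, keeps the order in a local queue so the essential flow degrades instead of stopping.

```python
import urllib.request
from collections import deque

# Hypothetical endpoints (assumptions for illustration only): a primary
# route through a CDN/front-door layer and a secondary route that
# bypasses it, e.g. another region or another provider.
ENDPOINTS = [
    "https://primary.example.com/orders",
    "https://secondary.example.net/orders",
]

# Local holding area for orders when every remote route is down.
offline_queue = deque()


def post(url, payload, timeout=3.0):
    """Send payload to url and return the HTTP status code."""
    request = urllib.request.Request(url, data=payload, method="POST")
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


def submit_order(payload, endpoints=ENDPOINTS, send=post):
    """Try each endpoint in order; queue the order locally if all fail."""
    for url in endpoints:
        try:
            status = send(url, payload)
            if 200 <= status < 300:
                return ("sent", url)
        except OSError:
            # URLError, HTTPError and timeouts are all OSError subclasses:
            # treat this route as down and move on to the next one.
            continue
    # Graceful degradation: keep the order locally for later replay.
    offline_queue.append(payload)
    return ("queued", None)
```

In a real system the queued orders would be replayed once a route recovers, and the fallback path itself would be exercised regularly as part of the runbook tests described above.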
